When ChatGPT launched in November 2022, it triggered the Artificial Intelligence (AI) revolution that is transforming every industry and changing the way we work. Among its numerous capabilities, AI can enhance efficiency and productivity in manufacturing, advance medical research, and improve creative tasks like writing this blog.

AI is usually broken into two main categories: Generative and Predictive. Generative AI (GenAI) uses existing data as a basis to create new original content such as conversational speech, written articles, images, or new products. Predictive AI, as its name implies, makes predictions based on data trends to improve decision making. GenAI is often used in marketing, design, and medicine. Predictive AI is used in market analysis, preventive maintenance, and finance.

While data centers house AI equipment, they can also benefit from these powerful systems. The advanced algorithms and machine learning capabilities of Predictive AI can analyze massive amounts of data in real time, allowing data centers to become increasingly intelligent and adaptive. They will be able to anticipate and address potential issues before they occur, ensuring maximum uptime and reliability, which is what data center customers value most.

AI is expected to contribute $15.7 trillion to the global economy by 2030 (source: PwC)

New GPUs Fuel AI Growth and a Lot of Changes

I was fortunate enough to recently attend NVIDIA GTC and OFC in March and DC World in April. All of the shows supplied a rich menu of new information and AI dominated most conversations. NVIDIA GTC provided a good look at the newest and most powerful GPU technology, OFC introduced the latest in high-speed networking, and DC World provided an outlook on how all of this will affect data center requirements.

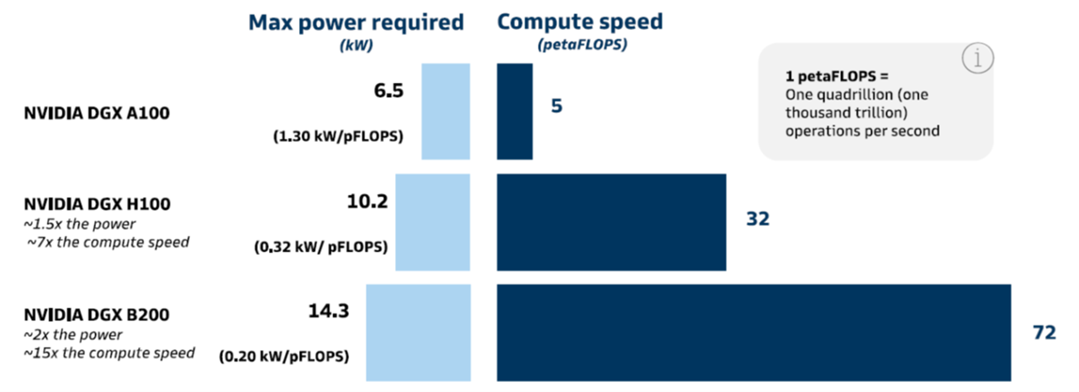

After several years of introducing a new GPU every two years, NVIDIA did so again in 2024 with the new Blackwell processor, which offers a significant improvement over its predecessors. The accompanying figure shows both the increase in power and the increase in compute speed for three different NVIDIA servers, each with 8 GPUs.

While it may take some time before products using Blackwell show up in the market, it has already given data center companies a view into what infrastructure they are going to need to have in place. A full rack of four DGX B200 servers will need 60kW, but the higher-end NVL72 SuperPod, which utilizes the GB200 Superchip, will need as much as 120kW and requires direct-to-chip liquid cooling. NVIDIA also announced its next GPU, called Rubin, which is potentially scheduled for release in 2026 and will be designed to optimize power efficiency.

At DC World, Ali Fenn, President of Lancium, stated “AI will be the largest capital formation in history” and noted that the supply of power, more than the GPU technology, will control how fast it can grow. There were already concerns about the supply of power before the advent of AI, when an average rack needed only 10kW, but the issue has taken on a whole new sense of urgency now that a rack may require as much as 12X that amount.

The increase in the number of AI systems in data centers and their high power usage per rack is speeding up the need for liquid cooling. This was evident on the exhibition floor at DC World, which featured over 10 liquid cooling companies, many of them startups less than two years old. While opinions differ on when liquid cooling is required, it is commonly felt that it should be used when rack power is greater than 40kW. Adding liquid cooling systems to an existing data center is not trivial, and one manager told me he was expecting to have to hire several plumbers for his permanent staff.
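To make the rack power numbers above concrete, here is a minimal Python sketch that compares the figures quoted in this article (10kW for an average pre-AI rack, 60kW for a rack of four DGX B200, and up to 120kW for an NVL72 rack) against the 40kW rule of thumb. The function and variable names are my own illustrative choices, and the 40kW threshold is only the commonly cited guideline mentioned above, not a formal standard.

```python
# Rack power figures quoted in this article (kW). The names are illustrative.
LEGACY_RACK_KW = 10.0               # average rack before the advent of AI
LIQUID_COOLING_THRESHOLD_KW = 40.0  # rule of thumb only, not a standard

RACKS_KW = {
    "average pre-AI rack": 10.0,
    "rack of four DGX B200": 60.0,
    "NVL72 rack (GB200)": 120.0,
}


def power_multiple(rack_kw, baseline_kw=LEGACY_RACK_KW):
    """Return how many times more power a rack draws than the baseline rack."""
    return rack_kw / baseline_kw


def needs_liquid_cooling(rack_kw, threshold_kw=LIQUID_COOLING_THRESHOLD_KW):
    """Return True when rack power is greater than the rule-of-thumb threshold."""
    return rack_kw > threshold_kw


def summarize(racks=RACKS_KW):
    """Map each rack name to (power multiple, liquid cooling suggested)."""
    return {
        name: (power_multiple(kw), needs_liquid_cooling(kw))
        for name, kw in racks.items()
    }


if __name__ == "__main__":
    for name, (multiple, liquid) in summarize().items():
        print(f"{name}: {multiple:.0f}X baseline power, "
              f"liquid cooling suggested: {liquid}")
```

Under these assumptions, both AI rack configurations land well above the 40kW guideline, which is consistent with the interest in liquid cooling on the show floor.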

10% of all data center power will be used for AI by 2025 (source: New York Times)

To connect all of the GPUs into a cluster (or pod) needed for larger-scale AI, fiber cabling and high-speed optical transceivers are required. With up to 8 GPUs per server, each running at data rates up to 800G, many data centers will see a 4X increase in the number of fiber cables. This in turn adds to the congestion in the cabling pathways and often leads to the need for wider pathways, such as 24” or 36” widths, and multiple layers if overhead ceiling space allows.
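As a rough back-of-the-envelope illustration of the cabling numbers above, the Python sketch below multiplies out the per-server bandwidth (8 GPUs at up to 800G each) and applies the 4X fiber multiplier to an existing cable count. The starting count of 500 cables is a purely hypothetical example value, and treating 4X as a flat multiplier is a simplifying assumption of mine; actual counts depend on the network design.

```python
# Upper-bound figures quoted in this article. The names are illustrative.
GPUS_PER_SERVER = 8
GPU_LINK_GBPS = 800       # "up to 800G" per GPU
FIBER_MULTIPLIER = 4      # "4X increase in the number of fiber cables"


def server_bandwidth_tbps(gpus=GPUS_PER_SERVER, link_gbps=GPU_LINK_GBPS):
    """Return the aggregate GPU network bandwidth of one server in Tb/s."""
    return gpus * link_gbps / 1000


def projected_fiber_cables(current_cables, multiplier=FIBER_MULTIPLIER):
    """Scale an existing fiber cable count by the quoted multiplier."""
    return current_cables * multiplier


if __name__ == "__main__":
    hypothetical_current_cables = 500  # example value only, not from the article
    print(f"Per-server GPU bandwidth: {server_bandwidth_tbps()} Tb/s")
    print(f"Projected cables: {projected_fiber_cables(hypothetical_current_cables)}")
```

Even with these simplified assumptions, the jump in cable count shows why pathway width and layering become design concerns.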

In addition, data centers will need to be designed for scalability and flexibility to handle AI workloads. High-speed, low-latency networks are crucial for efficient AI data processing, along with robust storage systems and advanced data management techniques. Data center operators will also be under even more pressure to mitigate the environmental impact of their operations, and the use of renewable energy sources will be a must. Due to the limitations of the power grid in many areas of the world, even nuclear power is getting serious attention as a way to reduce carbon footprint while also providing another source of power. Scalability, flexibility, security, and environmental sustainability are all essential factors to consider when designing and operating AI data centers.

New AI Data Centers Will Require New Environments

AI is affecting all markets, but it is not an exaggeration to say its biggest impact is being felt by those in the business of building data centers. The combination of all of these requirement changes has convinced many data center companies that retrofitting existing data centers is not always viable. New buildings will have to be built in areas with plentiful and inexpensive power. The new AI data centers will be purpose-built with liquid (water or dielectric fluid) cooling systems, wider cabling pathways, and the thicker concrete floors needed to support the heavier AI equipment.

Despite AI’s immense benefits, the transition needed to implement it in data centers presents a lot of challenges.

- More power to accompany the growing data

- Innovative cooling systems

- Up to 4X increase in the amount of fibers used to connect the servers and switches

- Larger overhead pathways

- Designs that allow capacity to be added without disrupting existing cabling networks

- Improved sustainability

In simplest terms, data center owners need to be able to handle more of everything: more fiber cables, more pathway room, more heat, and most importantly, more power. Despite these obstacles, it is an exciting time to be in the data center business as builds are at an all-time high and still not able to keep up with demand.

We at Panduit take a customer-centric approach to the AI revolution. It is our responsibility and commitment to be a trusted advisor to our customers by helping them navigate their AI journey. We are committed to staying at the forefront of this AI revolution and providing our customers with the innovative solutions and expertise they need to thrive in the evolving data center landscape.

This article provided a broad summary of the coming transformations in data center technology. It marks the beginning of a series of AI-focused blogs dedicated to guiding data center owners through the preparations needed to build generative AI infrastructure. To keep updated with our latest AI Data Center posts, please subscribe on the top right of the page.
